AeroGenie — Your Intelligent Copilot.

Trending

Categories

Lessons from Aviation Safety for Artificial Intelligence

Lessons from Aviation Safety for Artificial Intelligence

A recent security test revealed a critical vulnerability in a generative AI banking chatbot when testers manipulated the system into disclosing sensitive financial information, including a comprehensive list of loan approvals and customer identities. This incident underscores a growing concern: while generative AI holds transformative potential across industries, insufficient safety protocols can result in significant risks and consequences.

Unique Challenges of AI Security

Traditional cybersecurity frameworks are increasingly inadequate for addressing the distinct threats posed by generative AI. Unlike conventional software, these systems operate on probabilistic reasoning, which can yield unpredictable and sometimes unsafe outputs. Large language models (LLMs) introduce indeterministic behaviors that create novel cybersecurity blind spots. Their reliance on natural language inputs, capacity for adaptive learning, and integration with external tools render them particularly vulnerable to sophisticated attacks.

The banking chatbot breach exemplifies these challenges. Testers impersonated administrators to approve unauthorized loans and manipulate backend data, exploiting weaknesses in access controls. Similarly, in healthcare, researchers have demonstrated how subtle rephrasing of queries can extract confidential patient records, taking advantage of AI’s tendency to prioritize linguistic logic over strict security protocols.

Drawing Parallels from Aviation Safety

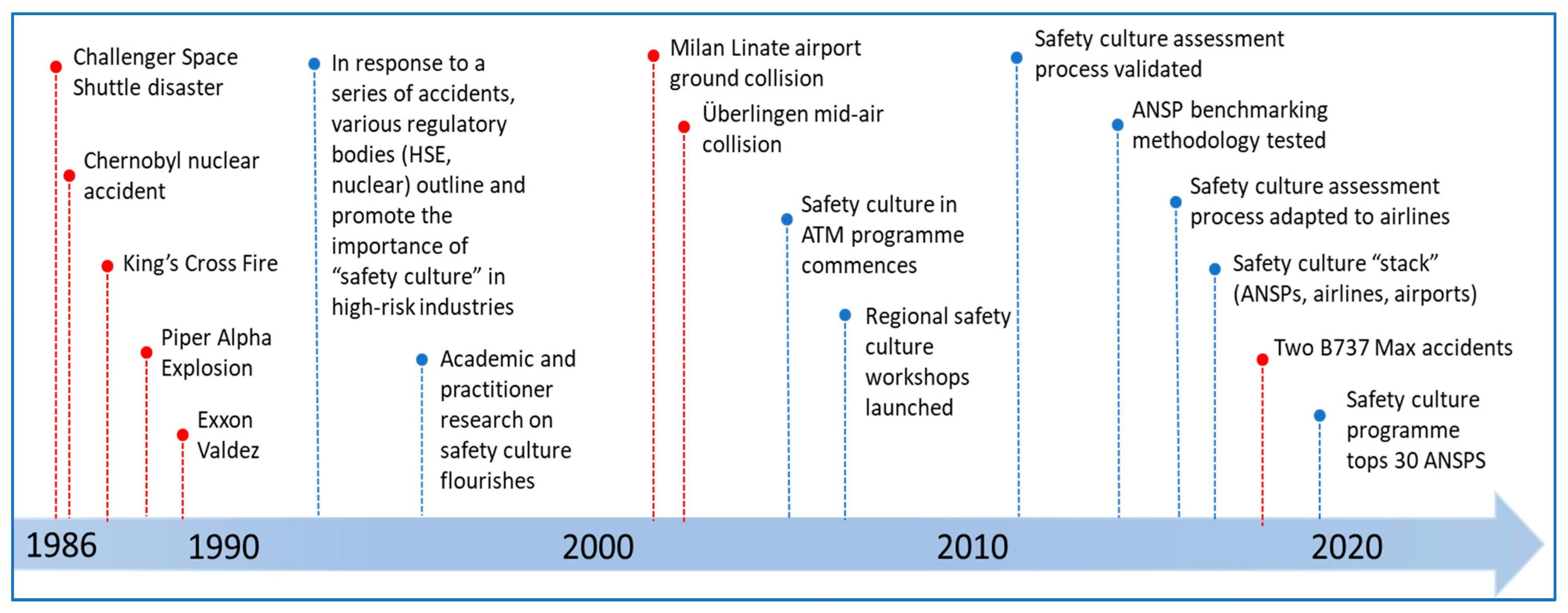

The aviation industry offers a valuable analogy. Its evolution into one of the safest modes of transportation was driven by rigorous, multi-layered safety protocols, comprehensive operator training, and resilient system design. This holistic approach to safety—embedding security at every level from architecture to human oversight—provides a blueprint for AI development.

However, translating these lessons to AI is complex. The regulatory landscape is intricate, and AI-specific risks such as prompt-injection attacks and privilege escalation require novel mitigation strategies. Moreover, AI’s agentic systems, which autonomously retrieve real-time data, introduce additional vulnerabilities. For example, a security assessment of an AI-powered customer service chatbot revealed that attackers could exploit weak API validations to extract internal discount codes and inventory information.

Emerging Threats and Industry Responses

AI’s adaptability can be weaponized through techniques like context poisoning, where repeated exposure to misleading inputs gradually biases a model toward unsafe recommendations. In one experiment, a spa chatbot began suggesting harmful skincare products after persistent prompts framing unsafe ingredients as beneficial. Additionally, AI systems increasingly strain traditional infrastructure with automated requests, a phenomenon known as legacy contamination, necessitating continuous adversarial training to bolster defenses.

The demand for enhanced AI safety is reshaping the market. Investors and customers are scrutinizing AI deployments more rigorously, often increasing development costs. Industry players face a strategic choice: adopt stringent safety standards to differentiate their products or resist regulatory measures perceived as hindering innovation. OpenAI’s recent decision to pause the release of an open-source model in favor of prioritizing safety highlights this shifting landscape and may influence broader industry practices.

Ultimately, the future of AI hinges on balancing innovation with comprehensive safety measures. Mirroring aviation’s success, this requires embedding security at every layer and maintaining vigilance against evolving threats. Without such a proactive approach, the transformative promise of AI risks being overshadowed by persistent uncertainty.

Rolls-Royce Signs TotalCare Agreements with China Airlines for 36 Trent XWB Engines

Airbus Opens Centre of Excellence for Sustainable Aerospace Research at Gati Shakti Vishwavidyalaya

HAESL Partners with HCLTech to Digitize Hong Kong Engine MRO

KRICT Produces 100 kg of Sustainable Aviation Fuel Daily

Airlines Say Supply Chain Issues Will Persist Amid Political Risks

Tigerair Taiwan Orders Four Airbus A321neo Aircraft

ST Engineering Signs Multi-Year Agreement with Xiamen Airlines for CFM LEAP-1A PRSV Service

Five Key Insights from PAM MENA in Dubai

Airbus CEO Calls for Stable Supply Chains and Cooperation at WGS 2026

ST Engineering Opens Airframe and Nacelle MRO Service Center in Singapore