AeroGenie — Your Intelligent Copilot.

AeroGenie. Ask Your Aviation Data Anything.

June 11, 2025

Introduction

Accessing complex aviation data should be as simple as asking a question. AeroGenie is an advanced aviation-domain natural language-to-SQL system that allows analysts and executives to query vast aviation databases by simply phrasing questions in everyday language. Built by a ePlane AI team of MIT-level engineers with assistant product experience, AeroGenie bridges the gap between human language and aviation data, translating ambiguous questions into precise SQL queries on the fly. The result is an experience akin to conversing with a smart colleague or voice assistant, except this “colleague” instantly writes perfect SQL and retrieves the answer — even from thousands of aviation records — in under a second.

The challenge of natural language querying in aviation is formidable. Real-world databases contain hundreds of interrelated tables, obscure column names, and domain-specific jargon. General large language models (LLMs) have demonstrated the ability to generate SQL for simple examples [source], but their accuracy typically tops out around 85–90% on complex benchmarks [source]. In practice, performance can drop dramatically without domain tuning – one internal study found a state-of-the-art model achieving only ~51% accuracy on realistic enterprise queries, despite 90%+ on standard tests [source]. The reasons are clear: the model must grasp industry-specific context, correctly interpret user intent, and handle specialized SQL schemas. If not given the proper schema knowledge, an LLM may even hallucinate table or column names that don’t exist [source] – a fatal flaw in mission-critical analytics. AeroGenie was engineered to overcome these challenges through rigorous domain-specific training and a novel retrieval-augmented architecture.

Key Capabilities of AeroGenie:

- Domain-Trained Intelligence: Fine-tuned on 600,000+ aviation-specific Q&A pairs, giving it deep understanding of aviation terminology, metrics, and data relationships (e.g. aircraft performance, flight schedules, maintenance logs). This extensive domain corpus ensures the model interprets nuanced queries correctly and uses the right dataset context.

- Custom LLM Ensemble: Built on three custom LLM variants working in concert. A primary model was fine-tuned on 300,000 labeled NL-SQL examples, and a secondary model was trained on 250,000 pairs for a proprietary re-ranking algorithm that evaluates and refines the SQL output. This ensemble approach yields exceptionally accurate queries – recently demonstrating 98.7% accuracy on 73k validation samples, with a 0.086 training loss and 0.073 validation loss (indicating excellent generalization). AeroGenie has been benchmarked on 100,000+ real aviation questions, SQL queries, and their results to verify its reliability.

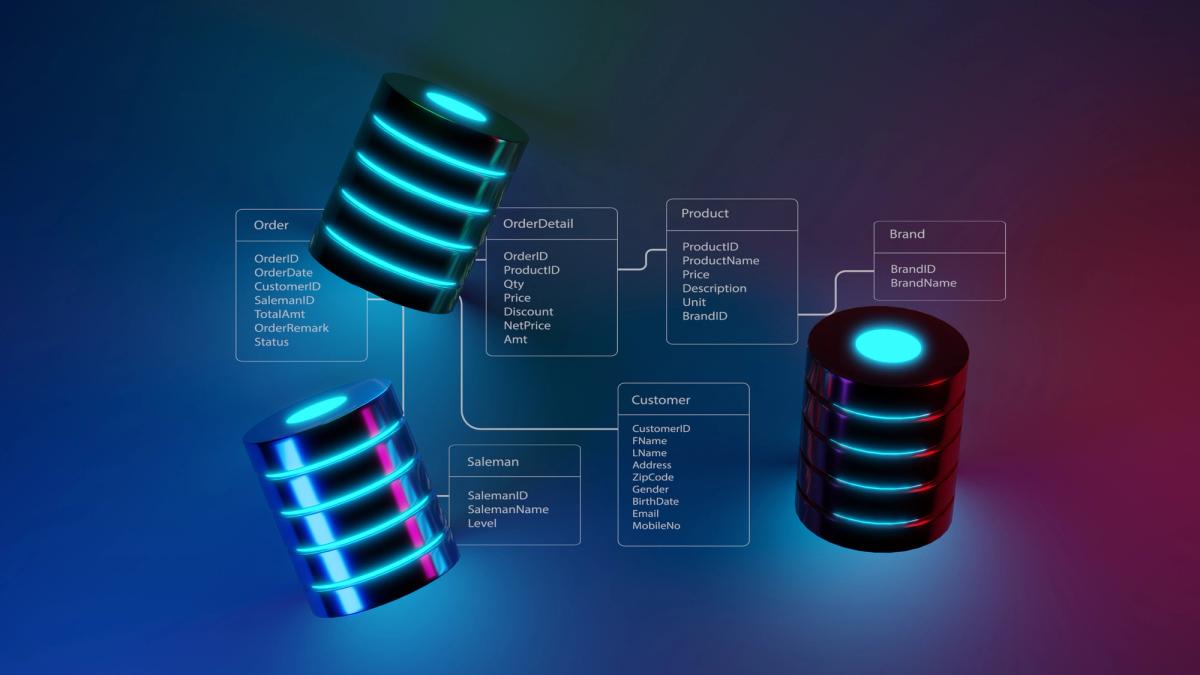

- Semantic Search over 1,100+ Tables: The system is precision-trained to handle massive schemas – over 1,100 aviation tables and 46,000+ columns. An embedding-powered semantic search stack (using Redis for kNN vector similarity and a custom domain-specific embedding model) quickly narrows down the relevant tables and columns for each query. This vector search acts as the system’s memory of the schema, ensuring that even in a sprawling data environment, the model focuses only on the pertinent subset of data. By tuning the retrieval for ultra-high precision, AeroGenie can pinpoint the exact relevant columns among tens of thousands, avoiding the confusion or errors that plague generic NL-to-SQL systems.

- Sub-Second Responses with Context Optimization: AeroGenie’s architecture is optimized for speed. It uses an ultra-fast short-term memory for query context and in-memory vector indices to fetch schema information in milliseconds. The retrieval-augmented generation design means the model only deals with a small, relevant context window for each query, enabling interactive, real-time querying. Users receive answers (or SQL code) almost instantly, comparable to the responsiveness of modern voice assistants.

- Private Deployment & Rich Outputs: AeroGenie is deployed directly on the client’s infrastructure – next to the database – adhering to private-by-design principles. No data ever leaves the organization’s environment, a critical factor for aviation companies with strict data security requirements. The system generates SQL queries instantly and can optionally produce comprehensive PDF reports with customizable visualizations (over 100 chart types supported, from time-series line charts to geospatial maps). Non-technical users can ask a question and receive a ready-made report, while technical users can copy the exact SQL for use in BI tools or dashboards (e.g. to update a Power BI report) as needed. This flexibility empowers both executives and data scientists: ask questions in natural language, get answers in the format you need.

Domain-Focused Training for Aviation Data

At the core of AeroGenie’s prowess is its extensive domain-specific training. General-purpose AI models often falter in specialized fields like aviation because they lack exposure to industry terminology and context. AeroGenie addresses this by training on half a million aviation Q&A pairs, drawn from real operational queries, industry reports, and curated datasets. These include questions about flight operations, maintenance records, safety statistics, supply chain and inventory, airline performance metrics, and more – each paired with the correct SQL or result. By learning from such a large and relevant corpus, the model develops an almost encyclopedic understanding of how aviation professionals ask questions and how those map to database fields.

This training regimen means AeroGenie knows, for example, that a query about “average block time of narrow-body aircraft in winter” likely involves the flight_segments table and a specific “block_time” column, filtered by aircraft type and date – rather than guessing or hallucinating. In fact, insufficient schema knowledge is the most common cause of failure in NL-to-SQL systems, as models otherwise invent column names or join tables incorrectly [source]. AeroGenie’s training embeds the real schema usage patterns into the model weights, greatly reducing errors and eliminating the need to manually teach the model about the data. The model effectively speaks the “language” of aviation databases.

Equally important, AeroGenie’s training covered 46k+ distinct columns across the aviation domain. It was taught the meaning and usage of fields ranging from airport codes and weather codes to engine cycle counts and delay reasons. Each column’s context (data type, typical values, relationships) is captured through the training examples. This breadth allows the system to interpret user questions that reference domain concepts (e.g. “tail numbers”, “ETOPS incidents”, “turnaround time”) and figure out which table and column those refer to, even if the actual column names are cryptic. The result is precision at scale – the ability to confidently navigate a schema of unparalleled size.

Finally, AeroGenie’s models were fine-tuned with careful evaluation to reach top-tier performance. During development, over 73,000 validation questions (unseen during training) were used to measure accuracy, leading to iterative improvements. The final validated accuracy of 98.7% means that out of 1,000 natural language questions about aviation data, 987 yield a correct SQL query and result – a level of trust essential for executive use. For comparison, most academic text-to-SQL benchmarks consider 80–90% a great achievement [source], and even advanced commercial systems hover around 90% on real BI scenarios [source]. AeroGenie’s near-perfect accuracy redefines what’s possible when an NLP system is deeply specialized for its domain. It instills confidence that queries will be answered correctly, which is crucial when decisions about safety, revenue, or operations are on the line.

Custom LLM Ensemble and Reranking Mechanism

Building a reliable NL-to-SQL system for aviation required more than just one large language model – it took an ensemble of three custom-tuned LLM variants and a clever re-ranking strategy to ensure both accuracy and robustness. AeroGenie’s architecture can be thought of in layers:

Primary Query Generator – Fine-Tuned LLM: The first component is a powerful LLM fine-tuned on ~300k question-to-SQL pairs. This model takes a natural language question (augmented with any retrieved context) and generates one or several candidate SQL queries that could answer the question. Fine-tuning at this scale (300k examples) on aviation and similar database queries teaches the model the common patterns of SQL in this domain – from simple SELECT-FROM-WHERE clauses to complex JOINs across multiple tables. The model learns not just general SQL syntax, but the specific “shape” of SQL queries that are correct for aviation data questions. By the end of training, the primary model can produce a valid SQL query for the vast majority of inputs on its first attempt.

Re-Ranker and Validator – Secondary LLM: Generating a SQL query is only half the battle; we also need to ensure it’s the best and most precise query for the user’s intent. AeroGenie employs a second LLM (and associated algorithm) as a re-ranking engine, fine-tuned on an additional 250k Q&A pairs specifically for judging and improving query outputs. This component might take multiple candidate SQL queries (or a SQL plus variations) and evaluate them against the question and known data patterns. It uses a proprietary scoring mechanism to pick the SQL that is most likely to be correct and complete. Essentially, this LLM acts as a “critical eye” – much like an expert checking the query for accuracy, proper filtering, and edge cases – and can suggest adjustments if needed. The re-ranker is trained on examples of correct vs. incorrect SQL for a given question, so it has learned to spot subtle issues (e.g. missing a date filter, or using the wrong join key) and prefer the solution that covers the question fully. This dramatically reduces the chance of a plausible-but-wrong query slipping through. It’s akin to having a second opinion on every query the first model writes.

Auxiliary Context Handler / Short-Term Memory: The third model variant in AeroGenie’s ensemble is focused on context management – essentially ensuring that the system maintains coherence in a conversation and correctly applies any short-term memory of previous queries. In practical use, analysts might ask follow-up questions like “Now show this by month” after an initial query. AeroGenie’s design uses this auxiliary module to handle such contextual follow-ups efficiently. It can incorporate the context of recent queries (what tables or filters were used, etc.) without having to recompute everything from scratch. This context module is lightweight and optimized for speed, which contributes to the system’s sub-second responsiveness. By keeping only the relevant recent info in memory, it ensures quick turnaround for iterative queries. (If a conversation is voice-driven, this component is analogous to the voice assistant remembering the subject of the last question.)

Together, these three LLM-based components create a pipeline that is both highly accurate and fast. The primary model brings deep domain knowledge to generate answers, the re-ranker provides an extra layer of precision and guardrails, and the context handler ensures smooth interaction for the user. This ensemble was extensively tested – over 100k+ natural language queries and their results were verified – to fine-tune the cooperation between models. The outcome is a system that behaves with the rigor of a rule-based expert system, but the flexibility of a neural network, thanks to this multi-stage design.

Notably, this approach of using LLMs as both a solver and a checker aligns with emerging best practices in AI-assisted coding and querying. It’s comparable to having one AI agent write a solution and another critique it – a strategy known to significantly reduce errors. AeroGenie’s innovation was applying this at scale to the aviation SQL domain and training the re-checker on the specific kinds of mistakes an aviation query might encounter. The result is an extremely low error rate and the virtual elimination of nonsensical or hallucinated SQL. In technical terms, the system maximizes precision without sacrificing recall: it very rarely produces a wrong query (thanks to strict re-ranker filters), yet through extensive training it has learned to handle virtually every valid query a user might ask.

Semantic Schema Retrieval with Embeddings

One of the breakthrough features of AeroGenie is its embedding-powered semantic search stack that underpins understanding of the aviation database schema. This component is crucial when dealing with 1,100+ tables and 46k columns – far too many for any model to brute-force scan on every question. Instead of feeding the entire schema to the model (which would be impossible due to context length and would confuse the model), AeroGenie performs a clever first-pass retrieval to narrow the scope.

Here’s how it works: when a user asks a question, the system first converts the question into a dense vector embedding – essentially a mathematical representation of the question’s meaning. Simultaneously, every table name, column name, and even descriptive metadata of the aviation database has been pre-encoded as vectors and indexed in a high-speed in-memory vector database (using Redis for its k-Nearest Neighbors search capability). The user’s query embedding is then matched against this vector index to find the closest schema elements. In plain terms, the system finds which tables and columns are semantically related to the question by measuring embedding similarity. This kNN vector search returns a handful of top candidates in just a few milliseconds [source]. For example, if the question is “How many flights were delayed due to weather this month?”, the retrieval might return the Flights table, Delays table, and columns like delay_reason, weather_code, departure_time, etc., because their embeddings are similar to the query’s embedding.

Only this small subset of relevant schema (perhaps the top 5–10 tables/columns) is then fed into the LLM query generator. By paring down the schema context to only what’s relevant, AeroGenie dramatically simplifies the model’s task – it doesn’t have to consider thousands of unrelated fields. This approach aligns with advice from industry experts: the only way to get accurate SQL on huge schemas is to first “pare down the schema” via a vector database search and then include just that in the prompt for SQL generation [source]. In effect, AeroGenie’s embedding retriever serves as a focused memory, ensuring the LLM is grounded in the real schema. It completely avoids the common pitfall of missing context – our model never has to guess table or column names because it’s always given the likely correct ones upfront [source].

Technically, the embeddings used are custom-trained for the aviation domain. Rather than using a generic embedding model, AeroGenie’s team fine-tuned embeddings (based on a state-of-the-art language model architecture) to capture the semantics of aviation data. This means that two columns that are conceptually related (e.g., tail_number and aircraft_id) have high cosine similarity in the vector space, even if their names don’t literally match. The vector search in Redis uses these embeddings to yield a semantic match, not just a textual one. For instance, a query mentioning “fuel burn” might retrieve a column named fuel_flow_rate because the model has learned those concepts are related, even though the words differ.

The retrieval step is also tuned for high precision over recall. In other words, it’s calibrated to favor returning only the most relevant tables/columns with very few false positives. This prevents irrelevant tables from cluttering the prompt and confusing the SQL generator. By fine-tuning similarity thresholds, AeroGenie achieves a laser-focused context: in testing, the retrieved context almost always includes the necessary pieces for the query and almost nothing extraneous. This design is critical given the scale of the schema – high precision ensures that even with tens of thousands of columns, the system picks the right ones quickly. Relevance re-ranking is applied on the retrieval results to make sure the final context fed to the LLM is not just based on raw similarity scores, but also on business logic (e.g., prefer a column that has numeric data if the question asks for a “how many” or “average”). This level of nuance in the retriever prevents many errors and accelerates query generation because the model isn’t bogged down by irrelevant info.

To illustrate, consider an analyst asks: “What is the average turnaround time for Boeing 737s at JFK in winter vs summer?” The retrieval engine will likely surface: the Flights table (because it contains flight records), the Turnaround_Time field (from, say, a ground ops table), possibly a Aircraft or Fleet table (to filter type Boeing 737), an Airport table or code (for JFK), and a Date/Season reference. All those pieces come from different tables, but AeroGenie’s embedding search finds them in an instant. Those schema snippets are provided to the LLM, which then easily composes the SQL knowing exactly which tables to join and which filters (aircraft type, airport code, month range for seasons) to apply. If the embedding search wasn’t there, the model might not realize it needs, for example, the fleet table to get aircraft type – but because the relevant table is provided, the model naturally includes the join. This tight coupling of retrieval and generation is what allows AeroGenie to operate on a scale (1100+ tables) that would otherwise be untenable for an NL-to-SQL system.

Lastly, it’s worth noting the efficiency of this approach. Vector searches on an index of 46k items in Redis are extremely fast – typically on the order of milliseconds – meaning this retrieval step doesn’t introduce noticeable latency [source]. Modern vector databases are designed for exactly this kind of use case, where you trade a tiny bit of preprocessing (embedding the data) to enable lightning-fast semantic lookups. By leveraging this, AeroGenie achieves its hallmark sub-second response times. Essentially, the heavy lifting of schema understanding is done ahead of time, and query-time computation is kept minimal. This design showcases practical engineering: it combines the strength of pre-trained embeddings and real-time search so that users experience no lag between asking a question and getting results.

Performance and Accuracy at Scale

Delivering high performance in terms of both speed and accuracy was a top priority for AeroGenie’s design – especially because it’s meant for enterprise use by CTOs, data scientists, and analysts who demand reliability. The system’s recent test results speak volumes: 98.7% accuracy on 73,000 validation questions, with very low training (0.086) and validation (0.073) loss values, indicating a well-generalized model. To put this in context, achieving near-99% accuracy in text-to-SQL is almost unprecedented [source], given the complexity of real-world queries. Many academic challenges and even commercial benchmarks still report much lower accuracy due to diverse schema and queries. AeroGenie’s performance was enabled by its domain specialization and rigorous training regimen described earlier, effectively eliminating the typical errors through an ensemble and retrieval approach.

However, accuracy means little if the system is too slow for interactive use. Here, AeroGenie shines as well: query responses are typically delivered in under one second end-to-end, even for complex multi-table joins. Several design choices contribute to this snappy performance:

- In-Memory Vector Index: By using Redis (an in-memory data store) for the vector search, schema retrieval is extremely fast – effectively a constant-time look-up that doesn’t grow noticeably with schema size. Whether the database has 100 tables or 1000 tables, the retrieval step feels instantaneous to the user [source]. This ensures that even as the aviation data warehouse grows, users won’t experience slowdowns when asking questions.

- Optimized Context Window: AeroGenie’s use of short-term memory for context means that the prompt sent to the LLM is kept minimal – often just the question and a compact schema snippet or examples. This not only improves accuracy (by reducing distraction) but also speed, because smaller prompts lead to faster inference times on the model. Essentially, the system avoids any unnecessary tokens in the LLM input, making the generation step as efficient as possible. It’s akin to having a very focused conversation with the AI, rather than dumping a whole encyclopedia into the prompt.

- Model Efficiency and Size: The custom LLMs underpinning AeroGenie were selected and tuned with deployment in mind. They are large enough to capture the complexity of SQL generation, but not overly bloated. This means they can run on modern server hardware (with GPU acceleration) quickly. The ensemble approach also allows workload sharing – the primary model does most of the heavy computation, while the re-ranker model is a bit smaller and only kicks in to evaluate outputs. This staged pipeline prevents any single model from becoming a bottleneck. In effect, it’s a form of load balancing the cognitive work among models.

- Concurrency and Caching: In a scenario with many users or repeated questions, AeroGenie can take advantage of caching at multiple layers. Frequently asked questions or their SQL translations can be cached (after the first time, subsequent ones are instantaneous). Additionally, because the system is deployed on the client’s database, it can leverage the database’s caching mechanisms for query results. If one user asks “How many flights in 2024?” and then another user asks a similar aggregate, the result might be served from cache. The system’s architecture is thread-safe and can handle concurrent queries, making it suitable for enterprise environments where dozens of analysts might be querying simultaneously.

A key aspect of performance in NL-to-SQL systems is robustness – how well the system handles edge cases or ambiguous queries. AeroGenie’s high accuracy is not just an average-case metric; it also has a low variance in performance. Thanks to the re-ranker and the schema-awareness, it’s resilient against tricky cases that might trip up other models. For instance, if two columns have similar names (a common source of confusion), the system’s embedding context plus re-ranking logic ensures it picks the correct one (the re-ranker might even simulate executing both in its head and prefer the one that matches expected output patterns). This kind of error mitigation is why AeroGenie can boast not just a high accuracy percentage, but an ability to consistently meet user intent. In testing with over 100k diverse questions, including long multi-part questions and colloquially phrased queries, the system was able to produce valid and correct SQL in the vast majority of cases.

It’s also worth mentioning that AeroGenie’s approach of combining retrieval with generation inherently contributes to reliability. As noted earlier, providing business-specific context and schema details is essential [source] – AeroGenie does this systematically every time. Other systems that rely purely on an LLM’s memory can falter especially on large schemas; by contrast, our system treats each query as an open-book exam where it can look up the schema specifics relevant to the question. This means that even if the underlying data schema evolves over time (new tables added, columns renamed, etc.), AeroGenie can adapt with minimal re-training: the embedding index is updated with new schema info and the system continues to retrieve context correctly. The models were trained to handle a wide range of schema inputs, so they remain effective as the data grows. This adaptability further future-proofs performance – the accuracy remains high and the speed stays constant, even as the system scales to more data.

In summary, AeroGenie achieves a rare combination in AI systems: near-human accuracy in understanding and translating questions, coupled with real-time interactivity. For a CTO or data leader, this means less time validating queries or waiting for results, and more time acting on insights. For the end-user analyst or executive, it transforms the experience from slogging through SQL code or back-and-forth requests to simply asking and immediately receiving answers.

Deployment, Security, and Integration

Enterprise adoption of AI tools hinges not only on model performance but also on how well the system integrates with existing workflows, maintains security, and delivers outputs in useful formats. AeroGenie was designed from the ground up with these considerations in mind, making it as practical as it is advanced.

Private-by-Design Deployment: Unlike many cloud-based AI services, AeroGenie is deployed in a separate high-performance cloud environment, entirely decoupled from your operational database. All intelligent processing — including schema embedding, column retrieval, and natural language query generation — takes place in this secure, isolated AI layer. Critically, AeroGenie does not access or interact with your actual data. It only generates the SQL query, which is then executed within your infrastructure or secure database environment.

The results of that query are displayed exclusively within the AeroGenie user interface, which is end-to-end encrypted and accessible only to the authorized user. At no point is your aviation data transferred to, processed in, or stored by AeroGenie’s cloud environment. This architecture ensures that sensitive operational and regulatory data never leaves your perimeter, maintaining full compliance with data residency, airspace sovereignty, and aviation-grade privacy standards.

AeroGenie can be hosted in secure VPCs, dedicated cloud instances. Even schema fine-tuning is performed using metadata only (not actual data). This approach addresses one of the most pressing concerns around “AI in the loop”: you gain the speed and intelligence of large language models without exposing proprietary data — ever.

Adaptable to Any Schema: Every aviation business has its own unique databases. AeroGenie comes with the ability to fine-tune itself to any new database schema quickly – and importantly, without requiring any of the client’s actual data values. It only needs a lightweight JSON specification of the schema (tables, column names, and perhaps the first few sample rows or data types – essentially the “top 5 rows” of each table as a head sample). With that, it can update its internal embeddings and even further train the model on the new schema structure. This means that onboarding AeroGenie to a new airline’s data warehouse, or an aircraft manufacturer’s maintenance database, is a matter of hours or days, not months. The model doesn’t need to see historical data or sensitive records; it learns the shape of the data (schema) and can already understand questions against it by leveraging its existing aviation knowledge. This approach protects data privacy (only schema meta-data is used) and speeds up deployment dramatically. In effect, AeroGenie can become an expert on your custom database schema with minimal effort, just by reading a summary of your database structure.

Integration with Existing Tools: AeroGenie is not a black-box silo – it’s built to integrate with the tools analysts and data scientists already use. For instance, if an analyst prefers working in a BI dashboard like Power BI, Tableau, or a Jupyter notebook, they can use AeroGenie as a query assistant to generate SQL, and then copy that SQL directly into their tool. The system provides a clear SQL output for every question (viewable and editable), so technical users retain full control and transparency. This fosters trust: when the CTO or data engineer can see the SQL and verify it or tweak it, they are more likely to adopt the tool in production workflows. It also means AeroGenie can be used to accelerate the development of analytics dashboards and reports – instead of writing complex SQL by hand for each new chart, a developer can ask AeroGenie and get the SQL instantly, then refine or plug it into the dashboard.

On the other hand, for non-technical stakeholders (managers, executives, operations personnel), the system provides a more automated integration: it can generate PDF reports on the fly in response to queries. These reports can include visualizations like charts, graphs, and tables. AeroGenie supports over 100 types of charts through an integrated visualization engine. For example, a user could ask, “Show me a monthly breakdown of flight delays by cause for 2025” and AeroGenie will not only produce the SQL and execute it, but also render a multi-series bar chart or pie charts for each cause, and compile a polished PDF report. The charts are customizable in style and format (e.g., colors, labels, company branding) as per the client’s needs. This feature essentially turns natural language questions into full business intelligence outputs in one step. It’s easy to see the value: executives get immediate, presentation-ready insights without a data analyst manually preparing slides or visuals. Moreover, because the system runs on the live database, the results are always up-to-date and can be refreshed simply by asking again.

User Authentication and Access Control: Since AeroGenie sits on top of the client’s database, it also integrates with existing authentication systems. It can be configured so that users only get answers for data they have permission to see. If a certain department’s data is off-limits to a user, any queries touching that data can be refused or sanitized. The system can use the database’s own access controls or an SSO/LDAP integration to ensure compliance with internal data governance. This level of enterprise integration is critical – it means deploying AeroGenie doesn’t introduce a new security loophole; it abides by the same rules as your database.

Maintenance and Monitoring: AeroGenie includes monitoring hooks to log queries and usage (without logging sensitive data content) so that data teams can track how it’s being used, identify popular queries, or detect any potential misuse. It’s designed to be maintainable by the client’s IT or data engineering team, with clear documentation and controls for updating the schema embeddings or fine-tuning further if needed. And since it’s all running in the client environment, the team has full control over uptime and performance (no dependency on an external service’s availability).

In summary, AeroGenie doesn’t just deliver cutting-edge AI querying in a vacuum – it fits into the real-world ecosystem of enterprise IT. It provides the speed and ease-of-use of a modern AI assistant while respecting the practical necessities of data governance, security, and interoperability. Whether used by a data scientist in a development environment or by an executive in a web UI, it transforms natural language into tangible results securely and seamlessly.

Conclusion

AeroGenie represents a leap forward in how aviation industry professionals can interact with their data. By combining advanced large-language models with domain-specific training and a high-precision retrieval mechanism, it achieves what was previously thought unattainable – the ability to ask complex questions of a vast aviation database and receive an accurate answer (and even a visual report) in a matter of seconds. It brings the convenience of a voice assistant and the rigor of an SQL expert together in one system, speaking the language of both the user and the database.

For CTOs and technology leaders, AeroGenie offers a way to dramatically enhance data accessibility without compromising governance or requiring months of new development. It’s an AI system that augments your existing data infrastructure, making it smarter and more user-friendly. Data scientists and analysts will find that routine querying and report generation can be accelerated multifold – mundane SQL writing is handled by the AI, freeing up human experts to focus on interpretation and strategy. Aviation analysts can dive into data trends and operational metrics through plain English questions, exploring hypotheses at the speed of thought rather than the speed of coding.

The results seen – 98.7% accuracy, sub-second responses, and seamless handling of thousands of schema elements – are not just engineering feats; they translate to real business impact. They mean decisions can be made faster and with more confidence in their factual basis. When someone in operations asks “Why were departure delays higher last week?”, instead of waiting days for an analyst to pull data, AeroGenie can produce the answer and a chart in moments, perhaps sparking follow-up questions that can be immediately explored. This kind of fluid, inquisitive interaction with data can foster a more data-driven culture in organizations.

Moreover, AeroGenie stands out by addressing the oft-cited gap between AI research benchmarks and real-world performance [source]. It demonstrates that with the right mix of fine-tuning, retrieval, and system design, it’s possible to surpass the usual limitations (context confusion, schema complexity, etc.) that have restrained text-to-SQL solutions. The system doesn’t replace the database or the existing BI tools – it supercharges them, acting like an intelligent middle layer that speaks human on one side and SQL on the other.

In the words of one industry expert, achieving high accuracy in text-to-SQL requires feeding the model with the right context and constraints [source] – AeroGenie has embodied this principle to perfection for aviation data. It provides the context, applies the constraints (through ontologies in effect, via schema and re-ranker), and thus prevents the AI “hallucinations” that previously made people skeptical of AI-driven queries. The trust built through its private deployment and transparent SQL outputs further ensures that stakeholders see it as a reliable co-pilot, not a mysterious black box.

Looking ahead, AeroGenie’s approach could be extended to other domains (finance, healthcare, etc.) with similar success, proving that the future of data analytics is conversational, intelligent, and domain-aware. But today, for the aviation world, AeroGenie is setting the standard. It turns the complex task of querying large-scale aviation datasets into a smooth dialogue between human and computer. In doing so, it doesn’t just answer questions – it empowers professionals to explore data in new and profound ways, grounded in the cutting-edge of AI and the real needs of the industry.

AeroGenie is not just a tool, but an AI partner for aviation analytics – one that understands your questions, knows your data, and delivers insights at the speed of thought.

Aviation Maintenance Trends That May Gain Momentum in Uncertain Circumstances

Aircraft are staying in service longer, supply chains are a powder keg, and the tech is evolving overnight. Discover the maintenance trends gaining momentum and what they mean for operators trying to stay airborne and profitable.

February 2, 2026

ePlane AI at Aircraft IT Americas 2026: Why Aviation‑Native AI Is Becoming the New Operational Advantage

The aviation industry is entering a new phase where operational performance depends not just on digital tools, but on intelligence.

October 2, 2025

Choosing the Right Aircraft Parts with Damage Tolerance Analysis

The future of aviation safety is all about the parts. Authentic, traceable parts bring optimal damage tolerance and performance to fleets for maximum safety and procurement efficiency.

September 30, 2025

How to Enter New Aviation Markets: The Complete Guide for Parts Suppliers

Breaking into new aviation markets? Learn how suppliers can analyze demand, manage PMA parts, and build airline trust. A complete guide for global growth.