AeroGenie: Your Intelligent Copilot.

Why the Autopilot Metaphor Falls Short in Comparing AI in Medicine and Aviation

A commercial airline pilot descending through near-zero visibility may hand control to an automated system designed specifically for such conditions. The landing is executed flawlessly, passengers remain safe, and the pilot acknowledges the success with confidence. This scenario exemplifies technology performing as intended. Observing this, a physician aboard the flight might wonder when medicine will similarly entrust automation with its most complex diagnoses and decisions. While this comparison is compelling, it ultimately proves misleading when applied broadly to healthcare.

The Limits of the Aviation Analogy

In aviation, autopilot and autoland systems serve narrowly defined and well-understood functions. The operational parameters are fixed: the runway is predetermined, the physics governing flight are consistent, and the systems undergo rigorous testing under controlled conditions. Importantly, automation in aviation does not replace the pilot; it is employed sparingly, under explicit protocols, and always with human oversight. In fact, fewer than 1% of landings rely on automated landing technology. This is not due to technological shortcomings, but because aviation operates within a disciplined, standardized, and highly regulated framework.

Medicine, by contrast, lacks these characteristics. The physician’s analogy assumes that medicine’s most difficult moments resemble foggy landings: infrequent, clearly defined, and resolvable through sufficient data and algorithmic precision. However, the reality is far more complex. The most challenging issues in healthcare are not merely technical problems; they are ambiguous, evolving, and deeply human. Diagnoses cannot be equated to runways, patients are not system states, and clinical care is not governed by a single, invariant set of rules.

Complexity and Accountability in Clinical Care

When automation fails in aviation, investigations typically identify specific causes such as sensor errors, software glitches, or breakdowns in human-machine interaction. In medicine, failures are rarely so straightforward. They often arise from a confluence of factors including social context, access to care, trust, bias, values, timing, fear, denial, financial pressures, and knowledge gaps. These elements are not mere background noise to be filtered out; they constitute the very fabric of clinical care.

The analogy also neglects a critical distinction in system development. Aviation automation is engineered within a tightly regulated industry, shaped by decades of accident investigations, standardized designs, and a global safety culture. Medical AI, conversely, is frequently trained on incomplete or biased datasets and influenced by commercial incentives that emphasize scale and speed over nuance and safety. These systems do not emerge from a neutral environment.

AI in Medicine: Hype, Reality, and Regulation

As artificial intelligence’s role in healthcare expands, the focus in 2026 has shifted from innovation to practical integration, driven by workforce shortages and the imperative for greater efficiency. Nonetheless, skepticism persists regarding whether current AI models can ever replicate the human-like reasoning implied by the autopilot metaphor. Experts contend that artificial general intelligence (AGI) remains beyond reach, while market pressures intensify for companies to demonstrate tangible real-world benefits rather than theoretical potential.

Simultaneously, regulatory challenges are mounting. New legislation, such as California’s requirement for AI systems to detect and interrupt conversations about self-harm, underscores the complexities of deploying AI in sensitive and unpredictable environments. Industry competitors are striving to link AI more closely with deterministic systems to enhance reliability and outcomes, yet the fundamental differences between aviation and medicine endure.

Conclusion

The autopilot metaphor, though appealing, oversimplifies the realities of medicine. Unlike aviation, healthcare’s most difficult problems are neither bounded nor predictable, and they resist straightforward automation. As AI becomes increasingly embedded in clinical care, it is essential to acknowledge these distinctions and to approach technological integration with humility, caution, and a clear understanding of both its potential and its limitations.

The Impact of Aviation Leasing on Modern Lifestyles

Differences in Delta Air Lines’ Premium Cabin Across Widebody Aircraft

Tajik Air to Lease Two Airbus Aircraft

APEX TECH 2026: Engineering the Next Phase of Connected Travel

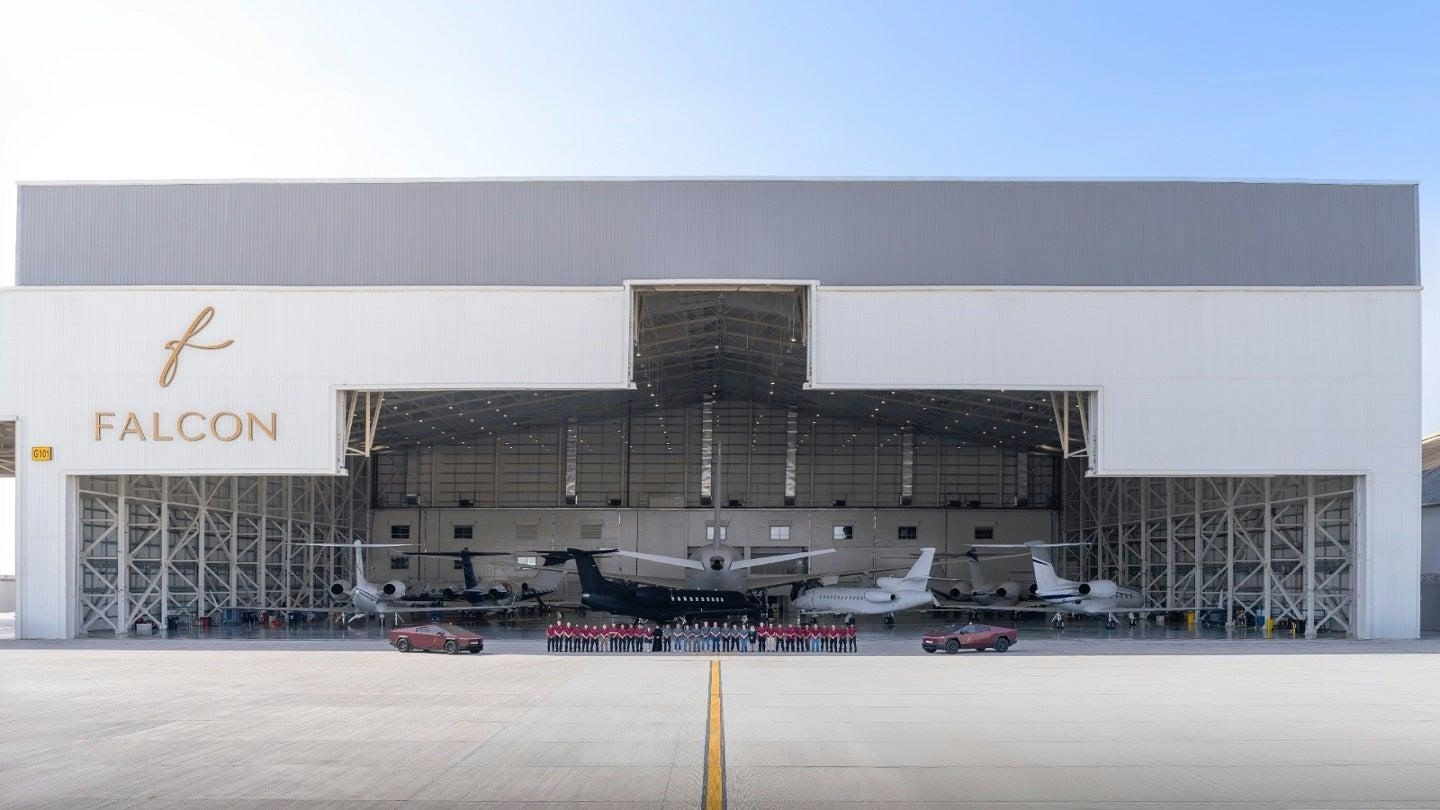

Turnaround Time Consistency to Shape MRO Competitiveness in 2026

Electric air taxis: Paris’ future in the skies and what it means for luxury travel

Cavorite X7 Hybrid-Electric eVTOL Introduces Split-Wing Design to Enhance Takeoff and Speed

Joby Aviation's Commitment to Safety in Electric Air Taxis

Morocco Advances Aviation Sector with Euroairlines Investment in Infrastructure and AI

Amazon Prime Air Airbus A330 Makes Emergency Landing in Cincinnati After Bird Strike Causes Engine Fire